Last month a video went viral on social media that showed the Speaker of the House apparently slurring her words and seeming impaired. By the time the video made the rounds, it had more than 2.5 million views on Facebook. However, it was a doctored video – just another example of a so-called “deepfake.”

What is a Deepfake – and is it really new?

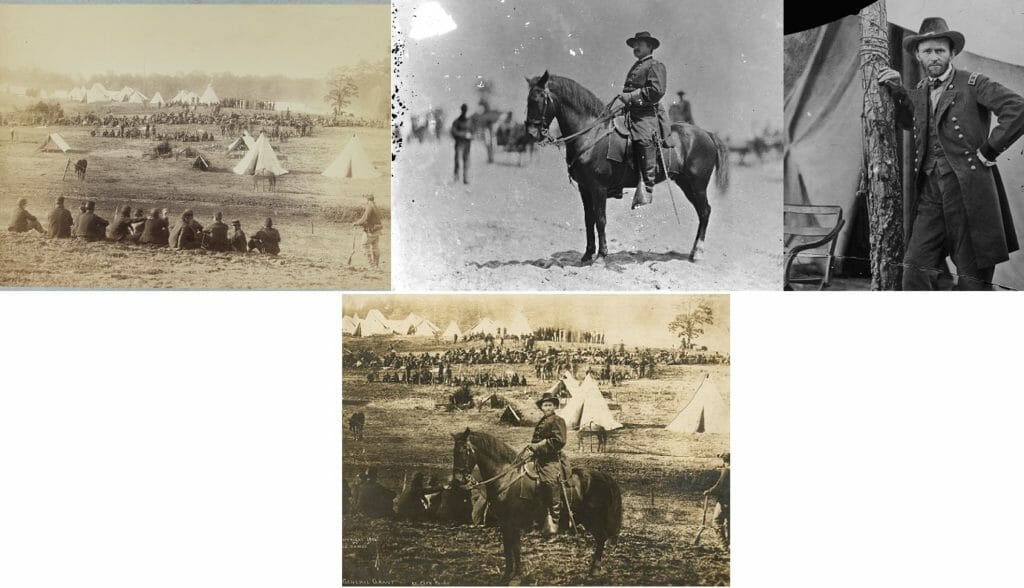

The term “deepfake” was coined in 2017 by an anonymous Reddit user who shared a manipulated video of an ex-girlfriend, and in just two years it has become a very real problem. Yet the ability to manipulate photos has existed practically since the medium was created – and one of the most infamous examples is of General Ulysses S. Grant sitting atop a horse.

That photo was an early “mash-up” that incorporated Grant’s head, the body of another general on a horse, and a Confederate POW camp (see below). The goal was to make the general seem more heroic.

A+B+C=Deepfake

Photo manipulation has been used for years as a propaganda tool – and it was something mastered by the Nazis and Soviets during the Second World War. Video manipulation has also served as propaganda, and one notable forerunner of the “deepfake” video showed German dictator Adolf Hitler “dancing a jig” after the surrender of France.

In truth, Hitler didn’t dance. He raised his knee and stomped his foot down in a rather militaristic style, and careful editing turned the gesture into a more comical move.

Photoshop: the prelude to the Deepfake

The ability to make the impossible seem real is where Hollywood special effects come in. The 1994 film Forrest Gump took this to new extremes when it placed the titular character – played by Oscar-winning actor Tom Hanks – in actual historical footage alongside real people, including President Kennedy and President Johnson, as well as John Lennon. However, the process used by Hollywood was still slow and tedious.

Photos have become much easier to manipulate thanks to programs such as Photoshop, so much so that edited photos are now dubbed “Photoshopped” whether or not that program was in fact the one used in the digital editing process.

Because manipulating photos has become so easy, people no longer accept a photo as real proof. A notable example was a photo that showed then-presidential candidate John Kerry sitting next to Vietnam War protester Jane Fonda. It didn’t take long for cyber sleuths to determine that the photo was a combination of two other images.

This is why manipulated photos are increasingly being called “cheapfakes,” because almost anyone could merge images together or change the context.

As technology advances, deepfakes are getting harder to spot

The much more worrisome issue has become fake videos, such as the aforementioned one involving the Speaker of the House. This is because simple programs such as FakeApp, which was released in January 2018, can be run on most home desktop computers.

“Fake or manipulated data in print form is bad enough, but with the advanced state of technology, both manipulated photos and video are a reality and becoming much more difficult to identify,” said Jim McGregor, principal analyst at TIRIAS Research.

“They say a photo is worth 1000 words, well a video is probably worth a million, and when you combine this with the potential for going viral through social media, it is a huge issue,” he told ClearanceJobs.

“I do worry that people, and sometimes even news outlets, are willing to accept information without questioning the authentication of it,” McGregor added. “I believe this is a real problem and that the tech industry, not just social media giants, should band together to help prevent it.”

What are the Dangers of Deepfakes?

There are several potential dangers and challenges related to the proliferation of deepfake videos.

“First and foremost, the technology needed to construct these images is becoming more available and easy to use, meaning that we’re likely to see an uptick of deepfake videos in the near future,” warned Charles King, principal analyst at Pund-IT.

“Some will be leveraged to gain political advantage – as the recent deepfake video of the House Speaker was meant to do,” King told ClearanceJobs. “But though high profile politicians and celebrities may make the most attractive targets, it won’t be surprising if bad actors use deepfake technology in blackmail schemes and other scams.”

This could, of course, present a problem for those with a security clearance. Instead of staging an actual “honey trap,” bad actors could fabricate videos that merely give the illusion that an individual committed such a transgression.

“But you have to ask yourself what the endgame looks like if trustworthiness hinges on subjective persuasion rather than objectively demonstrated truth,” added King. “These videos could inspire services designed to detect and overthrow deepfakes in much the same way that services exist to clean-up people’s online reputations. They wouldn’t convince everyone. Some dummies will choose to stay dumb no matter what. But they could be invaluable for folks who come under attack personally or professionally.”

The technology to counter deepfakes is still far off

In the short term, the technology used to create these fake videos for nefarious purposes could advance faster than any technology to detect and counter them.

“Despite their vaunted ‘innovations,’ Facebook, Twitter, Google and others are quickly overwhelmed by the sheer complexity and volume of data flooding in,” noted King. “But systemic cultural behaviors are also hindering effective action. Facebook’s inability to determine whether the Pelosi deepfake was an act of political sabotage or satire was simply pathetic.”

Then there is the issue of where to draw the line with these videos. What is legitimate satire, and what is a deliberate act to cast someone in a bad light?

“It is a challenge to do technically, socially, and legally, especially when you consider the First Amendment,” said McGregor. “We live in a world that is becoming increasingly connected and complex. Unfortunately, technology for all its positives is likely to also contribute to the restrictions of freedoms of many and social unrest.”