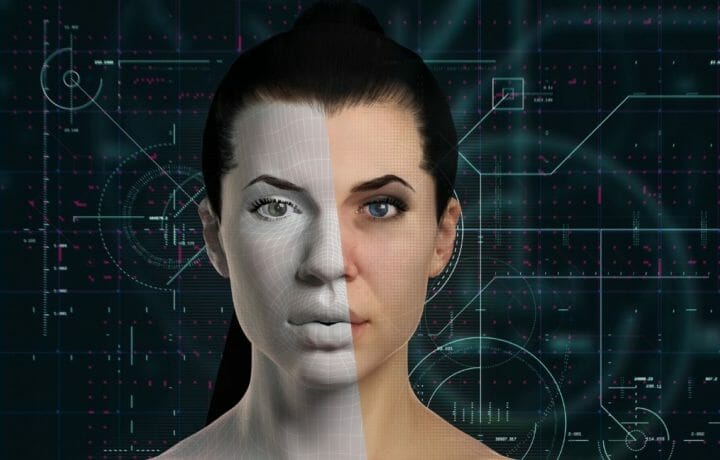

If you tuned into social media recently, you might have caught a video of Tom Cruise swinging a golf club, performing a magic trick, and genuinely acting somewhat goofy. However, there was nothing genuine about it: the video was artificially created and perfected using a generative adversarial network (GAN). This technology pairs a generator, which produces new footage from a database of images and videos of a person, with a system of checks that keeps rejecting flawed results, improving the quality to the point of passing as real. The process is analogous to a counterfeiter producing $100 bills while a forensic analyst with a magnifying glass and chemistry tools continues to find flaws in them, until the bills are good enough to pass to the public.
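For readers who want to see the counterfeiter-versus-analyst loop in miniature, here is a toy sketch in Python. It is not how the Tom Cruise video was made. Everything in it is an assumption chosen for illustration: the "real" data are just numbers clustered around 4, the forger is a single adjustable shift `b`, the detector is a simple logistic score, and the function name `train_toy_gan` is hypothetical. Real systems use deep neural networks on images and video, and a toy this small can oscillate rather than settle.

```python
import math
import random


def sigmoid(t):
    """Logistic function: maps any real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(rounds=200, batch=64, lr=0.05, seed=0):
    """Alternate detector and forger updates; return the forger's shift after each round."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0  # detector: D(x) = sigmoid(w * x + c), its guess that x is real
    b = 0.0          # forger: G(z) = z + b, starting far from the real data
    history = []
    for _ in range(rounds):
        # "Genuine" samples (illustrative assumption: numbers centred on 4).
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        # Forged samples: random noise shifted by the forger's current b.
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Detector step: gradient ascent on log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            miss = 1.0 - sigmoid(w * x + c)   # how far D is from calling it real
            grad_w += miss * x
            grad_c += miss
        for x in fake:
            fooled = sigmoid(w * x + c)       # how strongly D believes the fake
            grad_w -= fooled * x
            grad_c -= fooled
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Forger step: gradient ascent on log D(fake), i.e. try to fool the detector.
        grad_b = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        b += lr * grad_b
        history.append(b)
    return history


if __name__ == "__main__":
    shifts = train_toy_gan(rounds=10)
    print("forger shift after each of the first 10 rounds:", shifts)
```

Each round, the detector first learns to tell genuine samples from forged ones, and the forger then nudges its output in whatever direction the detector currently finds more convincing. In the early rounds that direction is toward the real data, which is the same pressure that pushes deep fake output toward realism.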

Bad Intentions Make Deep Fakes a Serious Threat

The creator of the Tom Cruise video, Chris Ume, is a visual effects artist who has combined his talent with that of deep learning experts to create a multi-disciplinary perfect storm of science and creativity. Unfortunately, Ume’s world of deep fake videos runs parallel to that of people with malevolent intent. Bot accounts, fake photos, and fake videos can ruin people’s lives, incite panic, distort public perceptions of current affairs, and even threaten national security. Fake videos of politicians acting inappropriately, CEOs predicting financial calamity, and famous people in pornographic scenes are becoming more and more prevalent. Manipulated visual media has been a problem for years, ever since programs such as Photoshop appeared, but the level of sophistication has risen sharply. In response, legislation has recently been passed to combat the problem.

DoD Budgets for the Fight

Congress included language in the 2021 National Defense Authorization Act that reads as follows:

SEC. 589F. STUDY ON CYBEREXPLOITATION AND ONLINE DECEPTION OF MEMBERS OF THE ARMED FORCES AND THEIR FAMILIES. (a) STUDY.—Not later than 150 days after the date of the enactment of this Act, the Secretary of Defense shall complete a study on … (8) An intelligence assessment of the threat posed by foreign government and non-state actors creating or using machine-manipulated media (commonly referred to as “deep fakes”) featuring members and their families, including generalized assessments of— (A) the maturity of the technology used in the creation of such media; and (B) how such media has been used or might be used to conduct information warfare.

While the above provision specifically relates to the protection of uniformed personnel, the research it directs will benefit all. In effect, the DoD budget is ready and willing to “take one for the team” by funding part of the fight.

State Legislation and Industry Join the Deep Fakes Battle

The Identifying Outputs of Generative Adversarial Networks (IOGAN) Act, recently passed, directs the National Science Foundation and the National Institute of Standards and Technology to support research on manipulated media. At least four states, as of January of this year, have passed similar legislation. Social media companies, such as Facebook, have joined the fight, spending time and money on tools that detect and take down deep fake videos rapidly. Universities have numerous research initiatives in progress that use AI and human behavior patterns to analyze the material effectively. A game of “cat and mouse” is ongoing as the creator tries to outwit the detector, and vice versa.

When we think of cybersecurity, it is often in the context of stealing information or disrupting operations after gaining control of someone’s network. Deep fakes break that paradigm as a standalone weapon, but imagine how they could be used in conjunction with a social engineering attack to gain someone’s trust. It is a scenario that demands, and has recently received, serious attention.