Intentionally or not, it’s “Artificial Intelligence Week” in Washington. On Monday, the White House released a statement on the subject, declaring it “of paramount importance to maintaining the economic and national security of the United States.” And yesterday, the Department of Defense released an unclassified summary of its own “Defense Artificial Intelligence Strategy” that outlines the department’s basic roadmap for developing AI capabilities and integrating them into defense operations.

It’s not exactly activating Skynet. Yet.

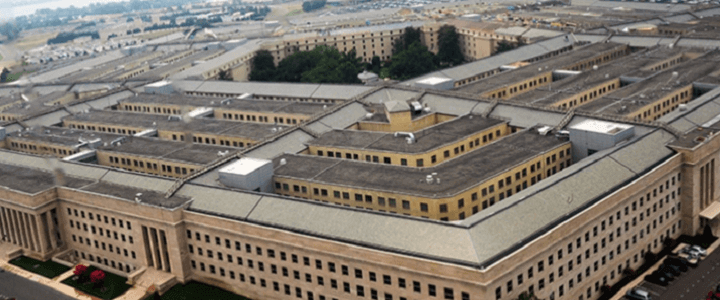

But we seem to be running headlong into a thing we can do without giving much thought to whether we ought to do it. It reminds me of the scene in the HBO movie The Pentagon Wars, which lampoons the development of the Bradley Infantry Fighting Vehicle, where a general asks the design team, “How about some portholes along the side? For individual firearms? So the fellas can stick out their guns and shoot people.” (For the record, that was a bad idea.)

The Pentagon is trying to go about this the right way. Last June, the department quietly announced the creation of a Joint Artificial Intelligence Center under the Chief Information Officer, to “pursue AI applications with boldness and alacrity while ensuring strong commitment to military ethics and AI safety.” In December, the Pentagon decided that Air Force Lt. Gen. Jack Shanahan, who had been running Project Maven, would lead this new organization.

The development of an AI strategy is the next logical step in the development of this critical capability.

My fears are somewhat irrational, I know. Like the JAIC memo, the strategy says up front that a major component is to “articulate [the department’s] vision and guiding principles for using AI in a lawful and ethical manner to promote our values.” Like many my age, I worry about removing the “man-in-the-loop.” When making literal life-and-death decisions, which releasing weapons always is, my old-fashioned brain prefers to have a thinking, feeling human making the decision.

This is what frustrated me when Google employees pouted and held their breath until the company agreed to drop its participation in Project Maven. The Googlistas were worried about helping bring about more death and destruction when the project would have done the opposite.

Artificial Intelligence done correctly not only relieves the strain on humans, allowing them to devote their mental energy to making ethical decisions rather than to drudgery; it also provides additional information critical to making those decisions. Is that person in the video the same person photographed last week with the high-value target? Is he carrying a rifle or a stick? Is he loading a bomb or cans of cooking oil and bags of rice into his car? What is the probability of collateral damage from a strike right now? These are important questions that good AI can answer, making the tough decisions a little easier.

The AI strategy deals with much more than autonomous weapons systems. There are a host of ways machine learning and predictive analytics can aid the military. But I am sure I’m not alone in jumping straight to the worst-case scenario when I hear the combination of “advanced artificial intelligence” and “Department of Defense.”

However this effort proceeds, I just hope we don’t build it with portholes.