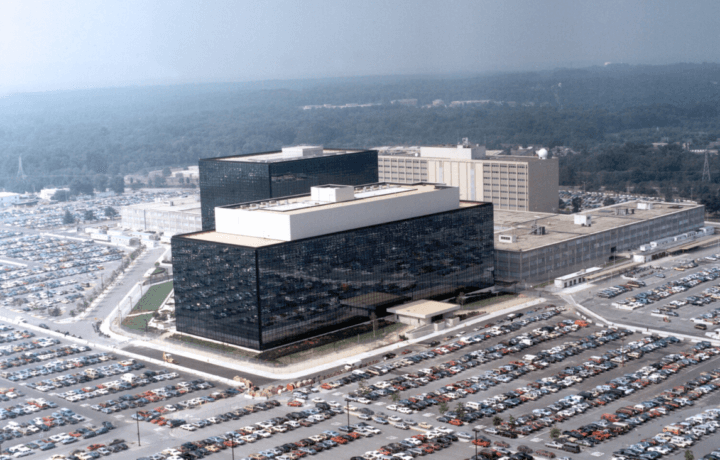

In a very short time, artificial intelligence (AI) has gone from being little more than a buzzword of science fiction to a widely employed technology that could impact the world in still unforeseen ways. It offers countless opportunities but also poses significant threats. To ensure the safe use of AI, the National Security Agency’s (NSA’s) Artificial Intelligence Security Center (AISC) – working with several additional U.S. and foreign agencies – this week released a Cybersecurity Information Sheet (CSI) intended to support National Security System owners and Defense Industrial Base companies that will be deploying and operating AI systems designed and developed by an external entity.

NSA’s Cybersecurity Information Sheet (CSI)

The CSI, titled “Deploying AI Systems Securely: Best Practices for Deploying Secure and Resilient AI Systems,” was co-authored by the Cybersecurity and Infrastructure Security Agency (CISA), the Federal Bureau of Investigation (FBI), the Australian Signals Directorate’s Australian Cyber Security Centre (ACSC), the Canadian Centre for Cyber Security (CCCS), the New Zealand National Cyber Security Centre (NCSC-NZ), and the United Kingdom’s National Cyber Security Centre (NCSC-UK).

“As agencies, industry, and academia discover potential weaknesses in AI technology and techniques to exploit them, organizations will need to update their AI systems to address the changing risks, in addition to applying traditional IT best practices to AI systems,” the CSI explained.

It added that the information sheet’s goals are to improve the confidentiality, integrity, and availability of AI systems, and to ensure that known cybersecurity vulnerabilities in AI systems are appropriately mitigated. It also emphasized the need to provide methodologies and controls to protect against, detect, and respond to malicious activity targeting AI systems and related data and services.

“AI brings unprecedented opportunity, but also can present opportunities for malicious activity. NSA is uniquely positioned to provide cybersecurity guidance, AI expertise, and advanced threat analysis,” said NSA Cybersecurity Director Dave Luber.

The CSI also noted that not all of its guidelines will be directly applicable to every organization or environment. Because the level of sophistication and the methods of attack will likely vary depending on the adversary targeting the AI system, organizations should consider the guidance alongside their use cases and threat profile.

“This joint effort in crafting the new AI guidance report underscores a significant milestone in enhancing the security and reliability of AI systems within national security realms,” suggested Avkash Kathiriya, senior vice president of research and innovation at cybersecurity provider Cyware.

“With a focus on bolstering confidentiality, integrity, and availability of AI systems, as well as mitigating cybersecurity vulnerabilities and providing frameworks for threat detection and response, the report serves as a crucial resource for organizations looking to fortify their AI deployments against malicious activities,” Kathiriya told ClearanceJobs. “Furthermore, by emphasizing the importance of human oversight and training in the AI era, the guidance acknowledges the pivotal role of human operators in ensuring the responsible and effective use of AI technologies in national security operations.”

The Cybersecurity Collaboration Center

The NSA established the AISC in September 2023 as part of the Cybersecurity Collaboration Center (CCC) to detect and counter AI vulnerabilities and to drive partnerships with experts from U.S. industry, national labs, academia, the intelligence community (IC), the Department of Defense (DoD), and select foreign partners.

It was also created to develop and promote AI security best practices and to ensure the NSA can stay ahead of adversaries’ tactics and techniques. To achieve those goals, the AISC has announced plans to work with global partners to develop a series of guidance documents on AI security topics as the field evolves, such as data security, content authenticity, model security, identity management, model testing and red teaming, incident response, and recovery.

The recent CSI is an example of such international cooperation. While it was intended for national security purposes, the guidance applies to anyone bringing AI capabilities into a managed environment, especially those in high-threat, high-value environments. It also builds upon the previously released Guidelines for Secure AI System Development and Engaging with Artificial Intelligence.

Securing the Deployment Environment

The CSI noted that organizations typically deploy AI systems within existing IT infrastructure. Before deployment, it advised, they should ensure that the IT environment applies sound security principles, including robust governance, a well-designed architecture, and secure configurations.

The paper called for validating the AI system before and during use, as well as actively monitoring model behavior. In addition, many standard cybersecurity practices should be in place, including regular updating and patching, and preparation for high availability (HA) and disaster recovery (DR).
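
As a rough illustration of what validating an AI system before use could involve, the Python sketch below checks a model file against a SHA-256 checksum that the supplier is assumed to have published through a trusted channel. It is not a procedure quoted from the CSI: the function names `sha256_of_file` and `validate_artifact`, and the existence of a published checksum, are hypothetical and used only for illustration.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in fixed-size chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large model files are not loaded into memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def validate_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the expected value.

    `expected_sha256` is assumed to come from the supplier via a trusted
    channel (hypothetical; the article does not describe this mechanism).
    """
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A deployment script might call `validate_artifact` on the model file and refuse to load it when the result is `False`. A checksum only detects accidental or malicious modification of the file; it says nothing about how the model behaves, so it would complement, not replace, the ongoing monitoring the paper describes.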